Hospitality giant Marriott today disclosed a massive data breach exposing the personal and financial information on as many as a half billion customers who made reservations at any of its Starwood properties over the past four years.

Marriott said the breach involved unauthorized access to a database containing guest information tied to reservations made at Starwood properties on or before Sept. 10, 2018, and that its ongoing investigation suggests the perpetrators had been inside the company’s networks since 2014.

Marriott said the intruders encrypted information from the hacked database (likely to avoid detection by any data-loss prevention tools when removing the stolen information from the company’s network), and that its efforts to decrypt that data set were not yet complete. But so far the hotel network believes that the encrypted data cache includes information on up to approximately 500 million guests who made a reservation at a Starwood property.

“For approximately 327 million of these guests, the information includes some combination of name, mailing address, phone number, email address, passport number, Starwood Preferred Guest account information, date of birth, gender, arrival and departure information, reservation date and communication preferences,” Marriott said in a statement released early Friday morning.

Marriott added that customer payment card data was protected by encryption technology, but that the company couldn’t rule out the possibility the attackers had also made off with the encryption keys needed to decrypt the data.

The hotel chain did not say precisely when in 2014 the breach was thought to have begun, but it’s worth noting that Starwood disclosed its own breach involving more than 50 properties in November 2015, just days after being acquired by Marriott. According to Starwood’s disclosure at the time, that earlier breach stretched back at least one year — to November 2014.

Back in 2015, Starwood said the intrusion involved malicious software installed on cash registers at some of its resort restaurants, gift shops and other payment systems that were not part of its guest reservations or membership systems.

However, this would hardly be the first time a breach at a major hotel chain ballooned from one limited to restaurants and gift shops into a full-blown intrusion involving guest reservation data. In Dec. 2016, KrebsOnSecurity broke the news that banks were detecting a pattern of fraudulent transactions on credit cards that had one thing in common: They’d all been used during a short window of time at InterContinental Hotels Group (IHG) properties, including Holiday Inns and other popular chains across the United States.

It took IHG more than a month to confirm that finding, but the company said in a statement at the time it believed the intrusion was limited to malware installed at point of sale systems at restaurants and bars of 12 IHG-managed properties between August and December 2016.

In April 2017, IHG acknowledged that its investigation showed cash registers at more than 1,000 of its properties were compromised with malicious software designed to siphon customer debit and credit card data — including those used at front desks in certain IHG properties.

Marriott says its own network does not appear to have been affected by this four-year data breach, and that the investigation only identified unauthorized access to the separate Starwood network.

Starwood hotel brands include W Hotels, St. Regis, Sheraton Hotels & Resorts, Westin Hotels & Resorts, Element Hotels, Aloft Hotels, The Luxury Collection, Tribute Portfolio, Le Méridien Hotels & Resorts, Four Points by Sheraton and Design Hotels that participate in the Starwood Preferred Guest (SPG) program.

Marriott is offering affected guests in the United States, Canada and the United Kingdom a free year’s worth of service from WebWatcher, one of several companies that advertise the ability to monitor the cybercrime underground for signs that the customer’s personal information is being traded or sold.

The breach announced today is just the latest in a long string of intrusions involving credit card data stolen from major hotel chains over the past four years — with many chains experiencing multiple breaches. In October 2017, Hyatt Hotels suffered its second card breach in as many years. In July 2017, the Trump Hotel Collection was hit by its third card breach in two years.

In Sept. 2016, Kimpton Hotels acknowledged a breach first disclosed by KrebsOnSecurity. Other breaches first disclosed by KrebsOnSecurity include two separate incidents at White Lodging hotels; a 2015 incident involving card-stealing malware at Mandarin Oriental properties; and a 2015 breach affecting Hilton Hotel properties across the United States.

This is a developing story, and will be updated with analysis soon.

Last month, Wired published a long article about Ray Ozzie and his supposed new scheme for adding a backdoor in encrypted devices. It's a weird article. It paints Ozzie's proposal as something that "attains the impossible" and "satisfies both law enforcement and privacy purists," when (1) it's barely a proposal, and (2) it's essentially the same key escrow scheme we've been hearing about for decades.

Basically, each device has a unique public/private key pair and a secure processor. The public key goes into the processor and the device, and is used to encrypt whatever user key encrypts the data. The private key is stored in a secure database, available to law enforcement on demand. The only other trick is that for law enforcement to use that key, they have to put the device in some sort of irreversible recovery mode, which means it can never be used again. That's basically it.

I have no idea why anyone is talking as if this were anything new. Several cryptographers have already explained why this key escrow scheme is no better than any other key escrow scheme. The short answer is (1) we won't be able to secure that database of backdoor keys, (2) we don't know how to build the secure coprocessor the scheme requires, and (3) it solves none of the policy problems around the whole system. This is the typical mistake non-cryptographers make when they approach this problem: they think that the hard part is the cryptography to create the backdoor. That's actually the easy part. The hard part is ensuring that it's only used by the good guys, and there's nothing in Ozzie's proposal that addresses any of that.

I worry that this kind of thing is damaging in the long run. There should be some rule that any backdoor or key escrow proposal be a fully specified proposal, not just some cryptography and hand-waving notions about how it will be used in practice. And before it is analyzed and debated, it should have to satisfy some sort of basic security analysis. Otherwise, we'll be swatting pseudo-proposals like this one, while those on the other side of this debate become increasingly convinced that it's possible to design one of these things securely.

Already people are using the National Academies report on backdoors for law enforcement as evidence that engineers are developing workable and secure backdoors. Writing in Lawfare, Alan Z. Rozenshtein claims that the report -- and a related New York Times story -- "undermine the argument that secure third-party access systems are so implausible that it's not even worth trying to develop them." Susan Landau effectively corrects this misconception, but the damage is done.

Here's the thing: it's not hard to design and build a backdoor. What's hard is building the systems -- both technical and procedural -- around them. Here's Rob Graham:

He's only solving the part we already know how to solve. He's deliberately ignoring the stuff we don't know how to solve. We know how to make backdoors, we just don't know how to secure them.

A bunch of us cryptographers have already explained why we don't think this sort of thing will work in the foreseeable future. We write:

Exceptional access would force Internet system developers to reverse "forward secrecy" design practices that seek to minimize the impact on user privacy when systems are breached. The complexity of today's Internet environment, with millions of apps and globally connected services, means that new law enforcement requirements are likely to introduce unanticipated, hard to detect security flaws. Beyond these and other technical vulnerabilities, the prospect of globally deployed exceptional access systems raises difficult problems about how such an environment would be governed and how to ensure that such systems would respect human rights and the rule of law.

Finally, Matthew Green:

The reason so few of us are willing to bet on massive-scale key escrow systems is that we've thought about it and we don't think it will work. We've looked at the threat model, the usage model, and the quality of hardware and software that exists today. Our informed opinion is that there's no detection system for key theft, there's no renewability system, HSMs are terrifically vulnerable (and the companies largely staffed with ex-intelligence employees), and insiders can be suborned. We're not going to put the data of a few billion people on the line in an environment where we believe with high probability that the system will fail.

Last week I wrote about Passwords Evolved: Authentication Guidance for the Modern Era with the aim of helping those building services which require authentication to move into the modern era of how we think about protecting accounts. In that post, I talked about NIST's Digital Identity Guidelines which were recently released. Of particular interest to me was the section advising organisations to block subscribers from using passwords that have previously appeared in a data breach. Here's the full excerpt from the authentication & lifecycle management doc (CSP is "Credential Service Provider"):

NIST isn't mincing words here, in fact they're quite clearly saying that you shouldn't be allowing people to use a password that's been breached before, among other types of passwords they shouldn't be using. The reasons for this should be obvious but just in case you're not fully aware of the risks, have a read of my recent post on password reuse, credential stuffing and another billion records in Have I been pwned (HIBP). As I read NIST's guidance, I realised I was in a unique position to help do something about the problem they're trying to address due to the volume of data I've obtained in running HIBP. Others picked up on this too:

It would be exceptionally helpful if @troyhunt could share anonymized passwords for this purpose.

— scriptjunkie (@scriptjunkie1) June 23, 2017

This blog post introduces a new service I call "Pwned Passwords", gives you guidance on how to use it and ultimately, provides you with 306 million passwords you can download for free and use to protect your own systems. If you're impatient you can go and play with it right now, otherwise let me explain what I've created.

Where Are the Passwords From?

Before I go any further, I've always been pretty clear about not redistributing data from breaches and this doesn't change that one little bit. I'll get into the nuances of that shortly but I wanted to make it crystal clear up front: I'm providing this data in a way that will not disadvantage those who used the passwords I'm providing. As such, they're not in clear text and whilst I appreciate that will mean some use cases aren't feasible, protecting the individuals still using these passwords is the first priority.

I've aggregated these passwords from a variety of different sources, starting with the massive combo lists I wrote about in May. These contain all the sorts of terrible passwords you'd expect from real world examples and you can read an analysis in BinaryEdge's post on how users are choosing their passwords on the internet. I began with the Exploit.in list which has 805,499,391 rows of email address and plain text password pairs. That actually "only" had 593,427,119 unique email addresses in it so what we're seeing here is a heap of email accounts with more than one password. This is the reality of these combo lists: they're often providing multiple different alternate passwords which could be used to break into the one account.

I grabbed the passwords from the Exploit.in list which gave me 197,602,390 unique values. Think about this for a moment: 75% of the passwords in that one data set had been used more than once. This is really important as it starts to put shape around the scale of the problem we're facing.

I moved on to the Anti Public list which contained 562,077,488 rows with 457,962,538 unique email addresses. This gave me a further 96,684,629 unique passwords not already in the Exploit.in data. Looking at it the other way, 83% of the passwords in that set had already been seen before. This is entirely expected: as more data is added, a smaller proportion of the passwords are previously unseen.
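Those percentages fall straight out of the row counts and unique counts quoted above. Here's a quick check of the arithmetic using only the figures from this post; the variable names are purely illustrative, and treating each row as one password occurrence is an assumption about how the percentages were worked out.

```python
# Figures quoted in the post.
exploit_in_rows = 805_499_391
exploit_in_unique_passwords = 197_602_390
anti_public_rows = 562_077_488
anti_public_new_passwords = 96_684_629
final_unique_passwords = 306_259_512

# Share of Exploit.in rows whose password repeats one already counted.
exploit_in_repeat_share = 1 - exploit_in_unique_passwords / exploit_in_rows

# Share of Anti Public rows whose password had already been seen.
anti_public_seen_share = 1 - anti_public_new_passwords / anti_public_rows

# Passwords contributed by all the remaining, smaller sources combined.
from_other_sources = (
    final_unique_passwords
    - exploit_in_unique_passwords
    - anti_public_new_passwords
)

print(f"{exploit_in_repeat_share:.0%}")   # 75%
print(f"{anti_public_seen_share:.0%}")    # 83%
print(f"{from_other_sources:,}")          # 11,972,493
```

The last figure shows that the two combo lists account for roughly 294 million of the final set, with just under 12 million coming from everything else.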

From there, I moved through a variety of other data sources adding more and more passwords albeit with a steadily decreasing rate of new ones appearing. I was adding sources with tens of millions of passwords and finding "only" a 6-figure number of new ones. Whilst you could say that the data I'm providing is largely comprised of those two combo lists, you could also say that once you have hundreds of millions of passwords, new data breaches are simply not turning up too much stuff we haven't already seen. (Keep that last point in mind for when I later talk about updates.)

When I was finished, there were 306,259,512 unique Pwned Passwords in the set. Let's talk about how you can now use them.

Checking Passwords Online

For quite some time now, I've had suggestions along the lines of that earlier tweet saying "you should build a service for websites to check passwords against when customers sign up". I want to explain why this is a bad idea, why I've done it anyway and why that's not how you should use the service.

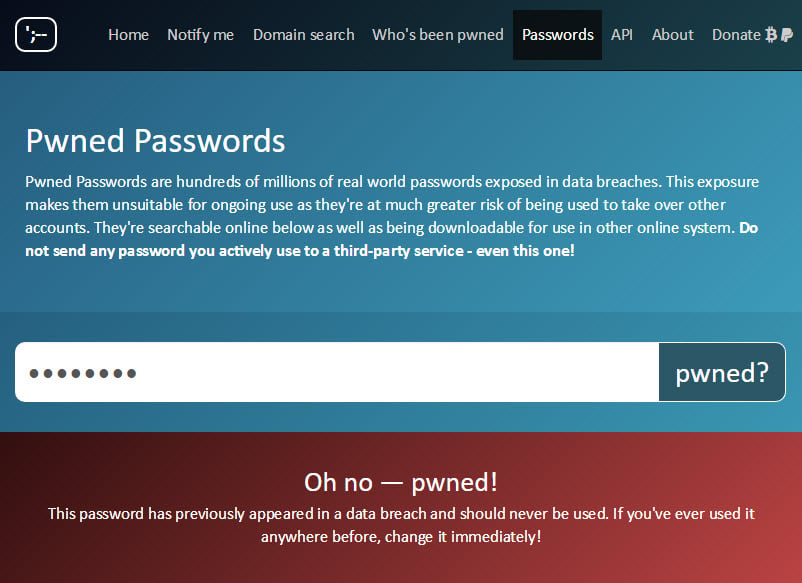

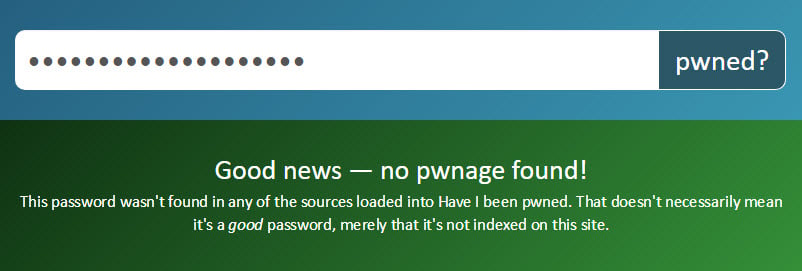

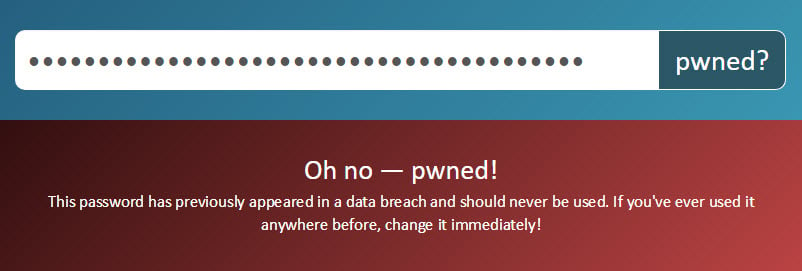

To the first point, there is now a link on the nav of HIBP titled Passwords. On that page, there's a search box where you can enter a password and it will tell you if it exists on the service. For example, if you test the password "p@55w0rd":

It goes without saying (although I say it anyway on that page), but don't enter a password you currently use into any third-party service like this! I don't explicitly log them and I'm a trustworthy guy but yeah, don't. The point of the web-based service is so that people who have been guilty of using sloppy passwords have a means of independent verification that it's one they should no longer be using. Mind you, someone could actually have an exceptionally good password but if the website stored it in plain text then leaked it, that password has still been "burned".

If a password is not found in the Pwned Passwords set, it'll result in a response like this:

My hope is that an easily accessible online service like this also partially addresses the age-old request I've had to provide email address and password pairs; if the password alone comes back with a hit on this service, that's a very good reason to no longer use it regardless of whose account it originally appeared against.

As well as people checking passwords they themselves may have used, I'm envisaging more tech-savvy people using this service to demonstrate a point to friends, relatives and co-workers: "you see, this password has been breached before, don't use it!" If there's one thing I've learned over the years of running this service, it's that nothing hits home like seeing your own data pwned.

To give people more options, they can also search for a SHA1 hash of the password. Taking the password "p@55w0rd" example from earlier on, a search for "ce0b2b771f7d468c0141918daea704e0e5ad45db" (the hash itself is not case sensitive so "CE0B..." is fine too) yields the same result:

The service auto-detects SHA1 hashes in the web UI so if your actual password was a SHA1 hash, that's not going to work for you. This is where you need the API which, like the existing APIs on the service, is fully documented. Using this you can perform a search as follows:

And as for that "but the actual password I want to search for is a SHA1 hash" scenario, you can always call the API as follows:

GET https://haveibeenpwned.com/api/v2/pwnedpassword/ce0b2b771f7d468c0141918daea704e0e5ad45db?originalPasswordIsAHash=true

That will actually return a 404 as nobody used the hash of "p@55w0rd" as their actual password (at least if they did, it hasn't appeared in plain text or was readily crackable). There's no response body when hitting the API, just 404 when the password isn't found and 200 when it is, for example when just searching for "p@55w0rd" via its hash:

GET https://haveibeenpwned.com/api/v2/pwnedpassword/ce0b2b771f7d468c0141918daea704e0e5ad45db

Just like the other APIs on HIBP, the Pwned Passwords service fully supports CORS so if you really did want to integrate it into a web front end somewhere, you can (I suggest sending only a SHA1 hash if you want to do that, at least it's some additional protection). Also like the other APIs, it's rate limited to one request every 1,500ms per IP address. This is heaps for legitimate web-based use cases.
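Here's a rough sketch of preparing that lookup from client code, assuming Python and UTF-8 encoding of the password. The endpoint pattern is copied from the example requests above and may not match what the service offers by the time you read this. `sha1_hex`, `lookup_url` and `is_pwned` are illustrative names rather than part of any HIBP library, and no request is actually sent.

```python
import hashlib

# Endpoint pattern copied from the example requests in the post.
API_BASE = "https://haveibeenpwned.com/api/v2/pwnedpassword/"

# Rate limit quoted in the post: one request every 1,500ms per IP address.
MIN_SECONDS_BETWEEN_REQUESTS = 1.5


def sha1_hex(password: str) -> str:
    """Return the SHA1 hash of a password as lowercase hex (UTF-8 assumed)."""
    return hashlib.sha1(password.encode("utf-8")).hexdigest()


def lookup_url(password: str) -> str:
    """Build the lookup URL so that only the hash ever leaves the client."""
    return API_BASE + sha1_hex(password)


def is_pwned(status_code: int) -> bool:
    """Interpret the response: 200 means found, 404 means not found."""
    if status_code == 200:
        return True
    if status_code == 404:
        return False
    raise ValueError(f"unexpected status code: {status_code}")


print(lookup_url("not my real password"))
```

A caller would issue a GET to the URL with whatever HTTP client it already uses, wait at least `MIN_SECONDS_BETWEEN_REQUESTS` between calls, and pass the status code to `is_pwned`.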

One quick caveat on the search feature: absence of evidence is not evidence of absence or in other words, just because a password doesn't return a hit doesn't mean it hasn't been previously exposed. For example, the password I used on Dropbox is out there as a bcrypt hash and given it's a randomly generated string out of 1Password, it's simply not getting cracked. I say this because some people will inevitably say "I was in the XX breach and used YY password but your service doesn't say it was pwned". Now you know why!

So that's the online option but again, don't use this for anything important in terms of actual passwords, there's a much better way.

Checking Passwords Offline

The entire collection of 306 million hashed passwords can be directly downloaded from the Pwned Passwords page. It's a single 7-Zip file that's 5.3GB which you can then download and extract into whatever data structure you want to work with (it's 11.9GB once expanded). This allows you to use the passwords in whatever fashion you see fit and I'll give you a few sample scenarios in a moment.

Providing data in this fashion wasn't easy, primarily due to the size of the zip file. Actually, let me rephrase that: it wouldn't be easy if I wanted to do it without spending a heap for other people to download the data! I asked for some advice on this whilst preparing the service:

What's a cheap way of hosting a 6GB file for a heap of people to download? Don't want to torrent and don't mind paying a *little*

— Troy Hunt (@troyhunt) July 20, 2017

There were lots of well-intentioned suggestions which wouldn't fly. For example, Dropbox and OneDrive aren't intended for sharing files with a large audience and they'll pull your ability to do so if you try (believe me). Hosting models which require me to administer a server are also out as that's a bunch of other responsibility I'm unwilling to take on. Lots of people pointed to file hosting models where the storage was cheap but then the bandwidth stung so those were out too. Backblaze's B2 was the most cost effective but at 2c a GB for downloads, I could easily see myself paying north of a thousand dollars over time. Amazon has got a neat Requestor Pays Feature but as soon as there's a cost - any cost - there's a barrier to entry. In fact, both this model and torrenting it were out because they make access to data harder; many organisations block torrents (for obvious reasons) and I know, for example, that either of these options would have posed insurmountable hurdles at my previous employment. (Actually, I probably would have ended up just paying for it myself due to the procurement challenges of even a single-digit dollar amount, but let's not get me started on that!)

After that tweet, I got several offers of support which was awesome given it wasn't even clear what I was doing! One of those offers came from Cloudflare who I've written about many times before. I'm a big supporter of what they do for all the sorts of reasons mentioned in those posts, plus their offer of support would mean the data would be aggressively cached in their 115 edge nodes around the world. What this means over and above simple hosting of the file itself is that downloads should be super fast for everyone because it's always being served from somewhere very close to them. The source file actually sits in Azure blob storage but regardless of how many times you guys download it, I'll only see a few requests a month at most. So big thanks to Cloudflare for not just making this possible in the first place, but for making it a better experience for everyone.

So that's the data and where to get it, let's now talk about the hashes.

Why Hashes?

Sometimes passwords are personally identifiable. Either they contain personal info (such as kids' names and birthdays) or they can even be email addresses. One of the most common password hints in the Adobe data breach (remember, they leaked hints in clear text), was "email" so you see the challenge here.

Further to that, if I did provide all the passwords in clear text fashion then it opens up the risk of them being used as a source to potentially brute force accounts. Yes, some people will be able to sniff out the sources of a large number of them in plain text if they really want to, but as with my views on protecting data breaches themselves, I don't want to be the channel by which this data is spread further in a way that can do harm. I'm hashing them out of "an abundance of caution" and besides, for the use cases I'm going to talk about shortly, they don't need to be in plain text format anyway.

Each of the 306 million passwords is being provided as a SHA1 hash. What this means is that anyone using this data can take a plain text password from their end (for example during registration, password change or at login), hash it with SHA1 and see if it's previously been leaked. It doesn't matter that SHA1 is a fast algorithm unsuitable for storing your customers' passwords with because that's not what we're doing here, it's simply about ensuring the source passwords are not immediately visible.

Also, just a quick note on the hashes: I processed all the passwords in a SQL Server DB then dumped out the hashes using the HASHBYTES function which represents them in uppercase. If you're comparing these to hashes on your end, make sure you either generate your hashes in uppercase or do a case insensitive comparison.
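To make the casing point concrete, here's a minimal sketch of an offline check in Python. It assumes the extracted file holds one SHA1 hex digest per line and that passwords are encoded as UTF-8 before hashing; the function names are illustrative, and a tiny stand-in list is used instead of the real download.

```python
import hashlib
from typing import Iterable, Set


def load_hashes(lines: Iterable[str]) -> Set[str]:
    """Build a lookup set, normalising every digest to uppercase."""
    return {line.strip().upper() for line in lines if line.strip()}


def password_hash(password: str) -> str:
    """SHA1 of the password as uppercase hex, matching the published hashes."""
    return hashlib.sha1(password.encode("utf-8")).hexdigest().upper()


def is_pwned(password: str, pwned_hashes: Set[str]) -> bool:
    return password_hash(password) in pwned_hashes


# Stand-in for the real file: one lowercase digest, to show the normalisation.
sample_lines = [hashlib.sha1(b"abc").hexdigest() + "\n"]
pwned = load_hashes(sample_lines)

print(is_pwned("abc", pwned))                       # True
print(is_pwned("a long random passphrase", pwned))  # False
```

Holding all 306 million digests in an in-memory set like this would take a great deal of memory, so for the full data an indexed database or similar store is the more practical home; the comparison logic stays the same.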

Let's go through a few different use cases of how I'm hoping this data can be employed to do good things.

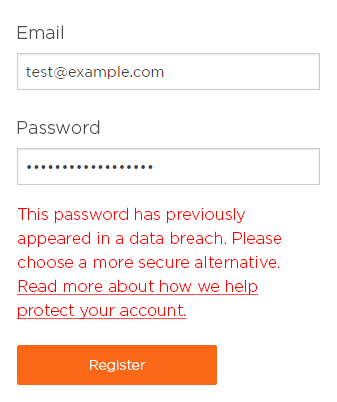

Use Case 1: Registration

At the point of registration, the user-provided password can be checked against the Pwned Passwords list. If a match is found, there are 2 likely explanations for what's happened:

- This is a password the user has previously used and it has been pwned in a data breach. It may even be a very good password strength wise, but it should now be considered "burned".

- This is a password someone else has used and it has been pwned in a data breach. This is almost certainly a poor password choice as someone else has independently chosen the same string of characters.

Both scenarios ultimately mean the same thing - the password has previously been used, exposed and is circulating amongst nefarious parties with criminal intent. Let's go back to NIST's advice for a moment in terms of how to handle this:

If the chosen secret is found in the list, the CSP or verifier SHALL advise the subscriber that they need to select a different secret, SHALL provide the reason for rejection, and SHALL require the subscriber to choose a different value.

This is one possible path to take in that you simply reject the registration and ask the user to create another password. Per NIST's guidance though, do explain why the password has been rejected:

This has a usability impact. From a purely "secure all the things" standpoint, you should absolutely take the above approach but there will inevitably be organisations that are reluctant to potentially lose the registration as a result of pushing back. I also suggest having an easily accessible link to explain why the password has been rejected. You and I know what a data breach is but it's a foreign world to many other people so some language the masses can understand (including why it's in their own best interests) is highly recommended.

A middle ground would be to recommend the user create a new password without necessarily enforcing this action. The obvious risk is that the user clicks through the warning and proceeds with using a compromised password, but at least you've given them the opportunity to improve their security profile.

There should not be a "one size fits all" approach here. Consider the risk in the context of what it is you're protecting and whilst that means that yes, there are cases where you certainly shouldn't allow the passwords, there are also cases where the damage would be much less and some more leeway might be granted.
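The choice between rejecting outright and merely warning can be sketched as a single policy switch. This is only an illustration under stated assumptions: `Decision`, `check_new_password` and the `enforce` flag are hypothetical names, and `pwned_hashes` stands in for whatever store holds the uppercase SHA1 digests.

```python
import hashlib
from enum import Enum
from typing import Set


class Decision(Enum):
    ACCEPT = "accept"
    WARN = "warn"      # explain the risk, let the user proceed anyway
    REJECT = "reject"  # explain the reason, require a different password


def check_new_password(password: str, pwned_hashes: Set[str], enforce: bool) -> Decision:
    """Apply the registration policy to a candidate password."""
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    if digest not in pwned_hashes:
        return Decision.ACCEPT
    return Decision.REJECT if enforce else Decision.WARN


# Tiny stand-in for the real list.
pwned_hashes = {hashlib.sha1(b"p@55w0rd").hexdigest().upper()}

print(check_new_password("p@55w0rd", pwned_hashes, enforce=True))   # Decision.REJECT
print(check_new_password("p@55w0rd", pwned_hashes, enforce=False))  # Decision.WARN
```

Setting `enforce` per service, or per risk level within a service, is one way to avoid the "one size fits all" trap.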

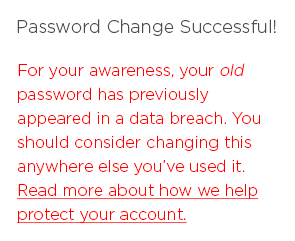

Use Case 2: Password Change

Think back to that earlier NIST guidance:

When processing requests to establish and change memorized secrets

Password change is important as it obviously presents another opportunity for users to make good (or bad) decisions. But it's a little different to registration for a couple of reasons. One reason is that it presents an opportunity to do the following:

Here you can do some social good; we know how much passwords are reused and the reality of it is that if they've been using that password on one service, they've probably been using it on others too. Giving people a heads up that even an outgoing password was a poor choice may well help save them from grief on a totally unrelated website.

Clearly, the new password should also be checked against the list and as per the previous use case at registration, you could either block a Pwned Password entirely or ask the user if they're sure they want to proceed. However, in this use case I'd be more inclined to err towards blocking it simply because by now, the user is already a customer. The argument of "let's not do anything to jeopardise signups" is no longer valid and whilst I'd be hesitant to say "always block Pwned Passwords at change", I'd be more inclined to do it here than anywhere else.
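A password change is the one moment where both the outgoing and the incoming password are available in plain text, so both can be checked together. A minimal sketch, with `ChangeResult` and `check_password_change` as hypothetical names and `pwned_hashes` again standing in for the real list:

```python
import hashlib
from typing import NamedTuple, Set


class ChangeResult(NamedTuple):
    old_was_pwned: bool  # worth a heads up, as the user may reuse it elsewhere
    new_is_pwned: bool   # grounds to block (or at least warn) before accepting


def sha1_upper(password: str) -> str:
    return hashlib.sha1(password.encode("utf-8")).hexdigest().upper()


def check_password_change(old_password: str, new_password: str,
                          pwned_hashes: Set[str]) -> ChangeResult:
    """Check both passwords involved in a change request against the list."""
    return ChangeResult(
        old_was_pwned=sha1_upper(old_password) in pwned_hashes,
        new_is_pwned=sha1_upper(new_password) in pwned_hashes,
    )


pwned_hashes = {sha1_upper("p@55w0rd")}
result = check_password_change("p@55w0rd", "a much longer random passphrase", pwned_hashes)
print(result.old_was_pwned, result.new_is_pwned)  # True False
```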

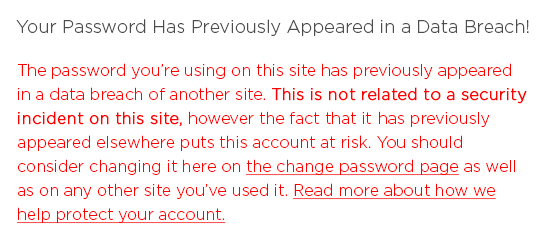

Use Case 3: Login

Many systems will already have large databases of users. Many of them have made poor password choices stretching all the way back to registration, an event that potentially occurred many years ago. Whilst that password remains in use, anyone using it faces a heightened risk of account takeover which means doing something like this makes a lot of sense:

I suggest being very clear that there has not been a security incident on the site they're logging into and that the password was exposed via a totally unrelated site. You wouldn't need to do this every single time someone logs in, just the first time since implementing the feature after which you could flag the account as checked and not do so again. You'd definitely want to make sure this is an expeditious process too; 306 million records in a poorly indexed database with many people simultaneously logging on wouldn't make for a happy user experience! An approach as I've taken with Azure Table Storage would be ideal in that it's very fast (single digit ms), very scalable and very cost effective.
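The "check once, then flag the account" idea might look something like the sketch below. It assumes the check runs after the submitted password has already been verified as correct, and that the service can store a per-account flag; `Account`, `pwned_check_done` and `should_prompt_at_login` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass
from typing import Set


@dataclass
class Account:
    username: str
    pwned_check_done: bool = False  # hypothetical per-account flag


def should_prompt_at_login(account: Account, password: str,
                           pwned_hashes: Set[str]) -> bool:
    """Return True if this login should show the pwned-password notice.

    Only the first login after the feature ships is checked; the account is
    then flagged so later logins skip the lookup.
    """
    if account.pwned_check_done:
        return False
    account.pwned_check_done = True
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    return digest in pwned_hashes


pwned_hashes = {hashlib.sha1(b"p@55w0rd").hexdigest().upper()}
account = Account("alice")
print(should_prompt_at_login(account, "p@55w0rd", pwned_hashes))  # True
print(should_prompt_at_login(account, "p@55w0rd", pwned_hashes))  # False
```

A real implementation would reset the flag whenever the password changes, or simply rely on the password change check instead.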

Other Use Cases

I'm sure clever people will come up with other ways of using this data. Perhaps, for example, a Pwned Password is only allowed if multi-step verification is enabled. Maybe there are certain features of the service that are not available if the password has a hit on the pwned list. Or consider whether you could even provide an incentive if the user proactively opts to change a Pwned Password after being prompted, for example the way MailChimp provide an incentive to enable 2FA:

Love this - just paid @MailChimp some money and got a 10% discount because I have 2FA enabled 😎 pic.twitter.com/3cgFb68VG4

— Troy Hunt (@troyhunt) June 30, 2017

The thing about protecting people in this fashion is that it doesn't just reduce the risk of bad things happening to them, it also reduces the burden on the organisation holding credentials that have already been compromised. Increasingly, services are becoming more and more aware of this value and I'm seeing instances of this every day. This one just last week from Spirit Airlines, for example:

@troyhunt should I be worried? Already check haveibeenpwned and know some decade old combos still exist out in the wild, but this is new pic.twitter.com/EQWkSsHldm

— Mallard Phallus (@mkane848) July 28, 2017

Or a couple of days before that, this one from Freelancer:

wasn't breached in any recent leak but still nice @troyhunt pic.twitter.com/C2nQD9UHcd

— A (@hej) July 27, 2017

I particularly like the way they mention HIBP :) In fact, this approach was quite well-received and they got themselves a writeup on Gizmodo for their efforts. So you can see the point I'm making: increasingly, organisations are using breached data to do good things whether that be from mining data breaches directly themselves, monitoring for email address exposure (a number of organisations actually use HIBP commercially to do this), or as I hope, downloading these 306 million Pwned Passwords and stopping them from doing any more harm.

If you have other ideas on how to use this data and particularly if you use it in the way I'm hoping organisations do, please leave a comment below. My genuine hope is that this initiative helps drive positive change but given the way it'll be downloaded and used, I'll have no direct visibility into its uses so I'm relying on people to let me know.

Augment Pwned Passwords with Other Approaches

The 306 million passwords in this list obviously represent a really comprehensive set of strings that shouldn't be used as passwords, but it's not exhaustive and nor can it ever be. For example, the earlier screen cap from NIST also says that you shouldn't allow the following:

Context-specific words, such as the name of the service, the username, and derivatives thereof

If your service is called "Jim's Drone Hire", you shouldn't allow a password of JimsDroneHire. Or J1m5Dr0n3H1r3. Or any other combination people may try. They won't be in the list of Pwned Passwords but you still shouldn't allow them.
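One rough way to catch those derivatives is to normalise both the password and the context words before comparing them. The sketch below is deliberately simplistic: the substitution table, the minimum word length and the function names are all assumptions for illustration, and real users have plenty more tricks than these.

```python
import re
from typing import Iterable

# Simplistic table of common character substitutions (an assumption, not a standard).
LEET = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a",
                      "5": "s", "7": "t", "@": "a", "$": "s"})


def normalise(text: str) -> str:
    """Lowercase, undo common substitutions, then keep letters only."""
    return re.sub(r"[^a-z]", "", text.lower().translate(LEET))


def contains_context_word(password: str, context_words: Iterable[str],
                          min_length: int = 4) -> bool:
    """True if the password contains a derivative of any context word.

    Words shorter than min_length after normalising are skipped so that a
    very short username doesn't flag almost every password.
    """
    candidate = normalise(password)
    for word in context_words:
        needle = normalise(word)
        if len(needle) >= min_length and needle in candidate:
            return True
    return False


context = ["Jim's Drone Hire", "alice"]
print(contains_context_word("JimsDroneHire", context))   # True
print(contains_context_word("J1m5Dr0n3H1r3", context))   # True
print(contains_context_word("correct horse battery staple", context))  # False
```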

You also should still use implementations such as Dropbox's zxcvbn. This includes 47k common passwords and runs client side so it can give immediate feedback as people are entering a password. Every one of those passwords is also included in the Pwned Passwords list so the server side validation is already covered if you're using the list I've provided here. (Incidentally, more than 99% of them had already appeared in data breaches loaded into the Pwned Passwords list.)

Updates, Attribution and Donations

As for updates, when a "significant" volume of new passwords becomes available I'll update the data. I'm not putting a number on what "significant" constitutes (I'll cross that bridge when I get to it), and it will likely be provided as a delta that can be easily added to the existing data set. But the reality is that 306 million passwords already represents a huge portion of the passwords people regularly use, a fact that was made abundantly clear as I built out the data set and found a decreasing number of new passwords not already in the master list.

In terms of attribution, you're free to use the Pwned Passwords without identifying HIBP as the source, simply because I want to remove every possible barrier to use. As I mentioned earlier, I know how corporate environments in particular can put up barriers around the most inane things and I don't want the legal department to stop something that's in everybody's best interests. Of course, I'm happy if you do want to attribute HIBP as the source of the data, but you're under no obligation to do so.

As I mentioned earlier, I've been able to host and provide this data for free courtesy of Cloudflare. There's (almost) no cost to me to host it, none to distribute it and indeed none to acquire it in the first place (I have a policy of never paying for data - the last thing we need is people being financially incentivised to hack websites). The only cost to me has been time and I've already got a great donation page on HIBP if you'd like to contribute towards that by buying me a coffee or some beer. I'm enormously grateful to those who do :)

Summary

There will be those within organisations that won't be too keen on the approaches above due to the friction they present to some users. I've written before about the attitude of people with titles like "Marketing Manager" where there can be a myopic focus on usability whilst serious security incidents remain "a hypothetical risk". If you're wearing the same shoes as I have so many times before where you're trying to make yourself heard and do what you ultimately believe is in the organisation's best interests, let me give you a couple of suggestions:

- Offer to "downsample" the users you apply this to over a trial period. For example, take just 10% of the logins, check them against Pwned Passwords and show the prompt I suggest above then measure the behaviour (i.e. how many then change their passwords).

- Just passively collect data in a "phase one" approach. See how many of the registrations, password changes and logins match the Pwned Passwords list and collect aggregated stats (no, don't log the password itself!) Use this data to then have an evidence-based discussion about the risk to the organisation.
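Both suggestions can be sketched in a few lines. This assumes sampling is done per user rather than per individual login (so the same person gets a consistent experience) and that the stats live in a simple in-process counter; `in_trial`, `record` and the event names are hypothetical.

```python
import hashlib
from collections import Counter
from typing import Set


def in_trial(user_id: str, percent: int = 10) -> bool:
    """Deterministically place roughly `percent` percent of users in the trial."""
    bucket = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 100
    return bucket < percent


stats: Counter = Counter()


def record(event: str, password: str, pwned_hashes: Set[str]) -> None:
    """Count checks and hits per event type; the password is never stored."""
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    stats[f"{event}_checked"] += 1
    if digest in pwned_hashes:
        stats[f"{event}_pwned"] += 1


pwned_hashes = {hashlib.sha1(b"p@55w0rd").hexdigest().upper()}
record("login", "p@55w0rd", pwned_hashes)
record("login", "a much longer random passphrase", pwned_hashes)
record("registration", "p@55w0rd", pwned_hashes)
print(dict(stats))
```

Dividing the `_pwned` count by the `_checked` count for each event gives the aggregate hit rate to take into that evidence-based discussion.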

Use this data to do good things. Take it as an opportunity to not just reduce the risk to the service you're involved in running, but also to help make people aware of the broader risks they face due to their password management practices. When someone gets a "hit" on a Pwned Password, help them understand the broader risk profile and what this means to their personal security. One thing that's really hit home while running HIBP is that few things resonate with people like demonstrating that they've been pwned. I can do that with those who come to the site and enter their email address but by providing these 306 million Pwned Passwords, my hope is that with your help, I can distribute that "lightbulb moment" out to a far greater breadth of people.